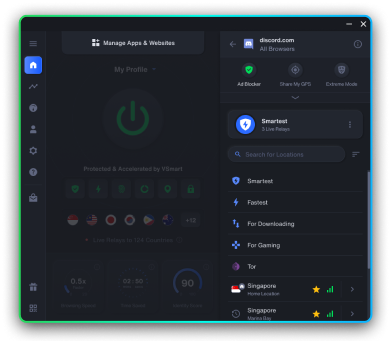

There’s no worse feeling than being an IT manager in the position of cleaning up after a hack. It might not even be their fault. Maybe it was an inside job, or it might have been the result of social engineering despite rigorous staff training. That doesn’t make it any less painful, however.

But the team needs to shake off that pain quickly: There’s work to be done.

Let’s try to answer the question of what a company should do after a data breach, because having a plan of action is important. CISM guidelines break this into a four-step process, which we’ll follow in this article:

1. Containment
2. Assessment
3. Notification
4. Review

We’ll talk about what we mean by containment first.

Containment is always the first order of business. Take any impacted clients and servers offline. This isn’t the time to bring backups online, either: the same core flaw might be present in the archives. Shut down any route by which data could be exfiltrated from the company. That might include taking down cloud assets, cutting WAN access, and the like. The active hack needs to be nullified before the next steps can be taken.

Now it’s time to hit the logs and see what’s intact. Intrusion detection and prevention systems (IDS/IPS) are the first places to look. Then the firewalls. Then routers and switches. Then individual systems. The goal is to find the source, the extent, and the likely initial impact of the breach. Remember: This is still a containment exercise. The purpose of the log analysis is strictly to make sure that you’ve ended the active data breach from every feasible angle.
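
As a rough illustration of that search order, the sketch below scans log lines from several sources for known indicators (for example, an attacker’s IP address) and reports matches in the order described above: IDS/IPS, firewalls, network gear, then hosts. The source labels, the sample lines, and the plain substring matching are assumptions made for this example; real IDS and firewall logs use vendor-specific formats and are usually queried through a log management or SIEM tool.

```python
from dataclasses import dataclass

# Search order from the article: IDS/IPS first, then firewalls, then routers
# and switches, then individual systems. The labels are hypothetical.
SOURCE_PRIORITY = ["ids_ips", "firewall", "network", "host"]


@dataclass
class Finding:
    source: str   # which log source the line came from
    line_no: int  # 1-based line number within that source
    text: str     # the raw log line


def triage_logs(logs: dict[str, list[str]], indicators: set[str]) -> list[Finding]:
    """Return log lines that mention any indicator, in source-priority order.

    Sources not listed in SOURCE_PRIORITY are skipped. Matching is a plain
    substring check, which is only a rough first pass.
    """
    findings: list[Finding] = []
    for source in SOURCE_PRIORITY:
        for line_no, text in enumerate(logs.get(source, []), start=1):
            if any(ioc in text for ioc in indicators):
                findings.append(Finding(source, line_no, text))
    return findings


if __name__ == "__main__":
    sample = {
        "host": ["sshd: accepted password for admin from 203.0.113.7"],
        "firewall": [
            "ALLOW tcp 198.51.100.2 -> 10.0.0.9:443",
            "ALLOW tcp 203.0.113.7 -> 10.0.0.5:22",
        ],
        "ids_ips": ["ALERT ssh brute force from 203.0.113.7"],
    }
    for f in triage_logs(sample, {"203.0.113.7"}):
        print(f"{f.source}:{f.line_no}: {f.text}")
```

Run against the sample, it lists the IDS alert first, then the second firewall line, then the host entry, so the earliest and broadest evidence is reviewed before individual machines.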

You’re also going to identify key machines and systems that need to be backed up forensically for further study. This is done for evidentiary purposes, but also so that the current state of each system can be examined at length later. If a system self-destructs during examination, you’ll have a bit-for-bit copy to restore and examine again from a different angle.
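
Forensic copies are commonly paired with a cryptographic hash, so that anyone examining a copy later can show it still matches what was captured. The sketch below uses Python’s standard `hashlib` to compute a SHA-256 digest in chunks (disk images are far too large to read into memory at once) and compares an original against its copy. It covers only the verification step; the capture itself would normally be done with dedicated forensic tooling, and the file paths are placeholders.

```python
import hashlib
from pathlib import Path

CHUNK_SIZE = 1024 * 1024  # read 1 MiB at a time so large images don't exhaust memory


def sha256_of_file(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in fixed-size chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(CHUNK_SIZE), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copy(original: Path, copy: Path) -> bool:
    """True if the copy is bit-for-bit identical to the original (same digest)."""
    return sha256_of_file(original) == sha256_of_file(copy)
```

Recording the digest in the incident log at capture time means that re-running `sha256_of_file` before each later examination shows whether a working copy has drifted from the evidence.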

Once the hack has been fully contained, it’s time to start the deeper analysis.

It’s time to figure out the damage. Assessment is done in two steps.

First, the most intact backups need to be identified and examined in a sandbox environment. They need to be cleaned, patched and tested to make sure that whatever happened isn’t going to happen again. That might mean temporarily pulling someone’s access if they were the target of social engineering. That might mean delving into the vulnerable services and either patching them appropriately or finding working alternatives.
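
One common heuristic for finding the “most intact” backup is to take the newest one captured before the earliest known sign of compromise, since later backups may already contain the attacker’s changes. The sketch below assumes that heuristic plus a hypothetical 24-hour safety margin, because the first logged indicator is rarely the true start of an intrusion. Even the chosen backup still carries the original vulnerability, so it goes through the sandbox cleaning and patching described above.

```python
from datetime import datetime, timedelta
from typing import Optional


def pick_restore_candidate(
    backups: list[datetime],
    first_compromise: datetime,
    safety_margin: timedelta = timedelta(hours=24),  # hypothetical buffer
) -> Optional[datetime]:
    """Return the newest backup taken at least `safety_margin` before the
    earliest known compromise, or None if no backup is old enough."""
    cutoff = first_compromise - safety_margin
    eligible = [b for b in backups if b <= cutoff]
    return max(eligible, default=None)


if __name__ == "__main__":
    weekly = [datetime(2024, 3, 1, 2), datetime(2024, 3, 8, 2), datetime(2024, 3, 15, 2)]
    print(pick_restore_candidate(weekly, first_compromise=datetime(2024, 3, 9, 12)))
```

Returning `None` rather than silently picking the oldest backup forces a human decision when every backup falls inside the suspect window.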

The results need to be tested. Any automated tests need to be adjusted for new systems and infrastructure, and automation should also cover applying patches to both new and old systems. Don’t ignore manual non-functional testing: it can find things that even well-trained AI can miss.
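
An automated check that patches actually landed on every system might look like the sketch below: it compares each host’s installed versions against a minimum patched version and reports anything still behind. The inventory format, host names, and the package name `examplesvc` are hypothetical, and the version parser only handles plain dotted numbers; real version strings with suffixes need a proper parser such as the one in the `packaging` library.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted numeric version like '1.10.0' into (1, 10, 0).

    Tuples compare element by element, so (1, 9, 3) < (1, 10, 0) as intended.
    """
    return tuple(int(part) for part in text.split("."))


def unpatched(inventory: dict[str, dict[str, str]], minimums: dict[str, str]) -> list[str]:
    """List installed packages that are older than the required patched version.

    `inventory` maps host -> {package: installed version}; `minimums` maps
    package -> minimum patched version. Packages absent from a host are skipped.
    """
    problems = []
    for host, packages in sorted(inventory.items()):
        for package, required in sorted(minimums.items()):
            installed = packages.get(package)
            if installed is not None and parse_version(installed) < parse_version(required):
                problems.append(f"{host}: {package} {installed} < {required}")
    return problems


if __name__ == "__main__":
    hosts = {"web-01": {"examplesvc": "1.9.3"}, "web-02": {"examplesvc": "1.10.0"}}
    for problem in unpatched(hosts, {"examplesvc": "1.10.0"}):
        print(problem)
```

Wired into an existing test suite, an empty result from `unpatched` becomes a pass condition that runs on both restored and newly built systems.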

Second, while the team prepares a patched system to bring back online, the breach response officers need to assess potential harm. Given what was accessed, and given what was or might have been exfiltrated, who is at risk? Is this strictly industrial espionage? Was it an attempt to expand a botnet? Was actual data stolen? Are customers impacted? If so, how?

When those questions are answered, immediate action must be taken to remove any further risk of harm. Any imposed restrictions, temporary disconnection from partnered systems, and limited user access can be explained later. In fact, that’s the next step. But first, do what you have to do to reduce the immediate impact of the hack and prevent as much harm as possible.

Notification is going to be tough, company-wide. Everyone from customer support to HR, to IT, to the media team needs to be ready for a flood of questions. Preparing a FAQ on the incident is a good idea.

Insurance and authorities are informed on a case-by-case basis. This is a financial and risk assessment decision, not an automatic step. Consult with the executives: they’ll need to make a hard decision, and quickly. This is where ransom demands might change the course of how everything else is handled, at least from a legal and financial point of view.

Impacted individuals usually include all company employees, at minimum. Particularly in cases of social engineering, everyone needs to be on high alert for repeat incidents, ransom demands, media attention, and the like.

If customers were even potentially impacted, they need to be informed. Anything having to do with personal data and account information needs to be treated with the utmost seriousness. Customers need to be advised to change passwords if they were potentially exposed. They need to be ready to check financial records, cancel credit cards, and secure their positions if any banking, credit, or debit information was exposed. They need to understand any change to their regular service. They need to be referred to the correct resources for however they were impacted: Government, internal, external, whatever would be appropriate and helpful.
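
Because the right advice depends on what was exposed, a small lookup table can keep support staff, the FAQ, and customer emails consistent. The sketch below paraphrases the advice from the paragraph above; the category keys and exact wording are assumptions for illustration, not a standard taxonomy.

```python
# Advice paraphrased from the article, keyed by hypothetical exposure categories.
ADVICE = {
    "passwords": "Change your password, including anywhere else you used the same one.",
    "card_data": "Check your financial records and cancel any credit or debit cards that may be exposed.",
    "banking_data": "Check your financial records and contact your bank to secure your accounts.",
}


def customer_notice(exposed: set[str], service_changes: str = "") -> list[str]:
    """Build the advice lines for one notice, in the order ADVICE lists them.

    Unknown categories raise an error so nobody sends a notice with a gap in it.
    """
    unknown = exposed - set(ADVICE)
    if unknown:
        raise ValueError(f"no advice defined for: {sorted(unknown)}")
    lines = [text for category, text in ADVICE.items() if category in exposed]
    if service_changes:
        lines.append(f"Changes to your regular service: {service_changes}")
    lines.append("See the incident FAQ for where to get further help.")
    return lines


if __name__ == "__main__":
    for line in customer_notice({"passwords", "card_data"}, "Logins are disabled until Friday."):
        print("-", line)
```

Failing loudly on an unmapped category is a deliberate choice here: it pushes the team to write guidance for that data type before any customer is contacted.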

Stakeholders, stockholders, partners, corporate partnerships, lawyers, and the like need to be informed as well. Anyone who relies on the service being up, anyone who will be financially impacted by the news, anyone who might be approached from a blackmail or ransom angle, and anyone who might be called upon for testimony needs to be in the loop.

Refer to the company’s incident response, business continuity, and disaster recovery plans for any additional entities that need to be in the loop.

Now that everyone can take a breath, it’s time for the ‘post game’ analysis.

Everything is on the table, from practices that led to the breach, to systems that should have detected it, to gaps in understanding and talent that need to be filled. But importantly: This isn’t a time to assign blame to individuals. This is a time to shore up defenses.

Any suggestion deserves consideration if it would help prevent future breaches, and particularly if it would help prevent immediate follow-up breaches by the same individual or by copycats. Blood is in the water. Once news gets out, IT security is going to be tested again; you can be sure of it.

Compile the suggestions for review by the senior staff, who will take into account the cost, effectiveness, and talent required to implement each solution. These should be processed quickly, identifying the best protection for the money and the most logical process changes. Once approved, any required spending requests (including contractors, overtime, etc.) need to be passed up the line immediately.
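
To give senior staff a starting point for “the best protection for the money,” suggestions can be ranked by estimated effectiveness per unit of cost and filled into a budget. The sketch below assumes each suggestion has a cost estimate, a hypothetical 0 to 10 effectiveness score agreed by the reviewers, and a flag for whether the talent to implement it is available. Greedy selection by ratio is not guaranteed to be optimal (this is a knapsack problem), so the output is a shortlist for discussion, not a decision.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    name: str
    cost: float           # estimated cost, in the same units as the budget
    effectiveness: float  # hypothetical 0-10 score agreed by reviewers
    has_talent: bool      # can staff or contractors actually implement it?


def shortlist(suggestions: list[Suggestion], budget: float) -> list[Suggestion]:
    """Greedy first pass: best effectiveness per unit cost that fits the budget."""

    def value_per_cost(s: Suggestion) -> float:
        # Zero-cost items (pure process changes) sort to the front.
        return s.effectiveness / s.cost if s.cost > 0 else float("inf")

    feasible = sorted(
        (s for s in suggestions if s.has_talent), key=value_per_cost, reverse=True
    )
    chosen: list[Suggestion] = []
    spent = 0.0
    for s in feasible:
        if spent + s.cost <= budget:
            chosen.append(s)
            spent += s.cost
    return chosen


if __name__ == "__main__":
    ideas = [
        Suggestion("MFA rollout", 5000, 9, True),
        Suggestion("New monitoring platform", 40000, 8, True),
        Suggestion("Phishing drills", 2000, 6, True),
        Suggestion("In-house red team", 8000, 9, False),
    ]
    for s in shortlist(ideas, budget=10000):
        print(s.name)
```

Items skipped for lack of talent are exactly the ones that may justify the contractor or overtime requests mentioned above.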

The resulting changes should be added to all appropriate documentation (business continuity, disaster recovery, policy, IT help scripting, training, FAQs, etc.). A follow-up review meeting on these procedures should be scheduled one year after the editing date, to make sure that they stood the test of time.

The key to proper data breach response is to avoid hesitation, maintain standards, keep a cool head, and bring in the help that you require. Follow internal processes, make the changes that need to be made, and test the results thoroughly.

Will is a former Silicon Valley sysadmin and award-winning non-functional tester. After 20+ years in tech, he decided to share his experience with the world as a writer. His recent work involves documenting government hacking methods while probing the current state of privacy and security on the Internet.